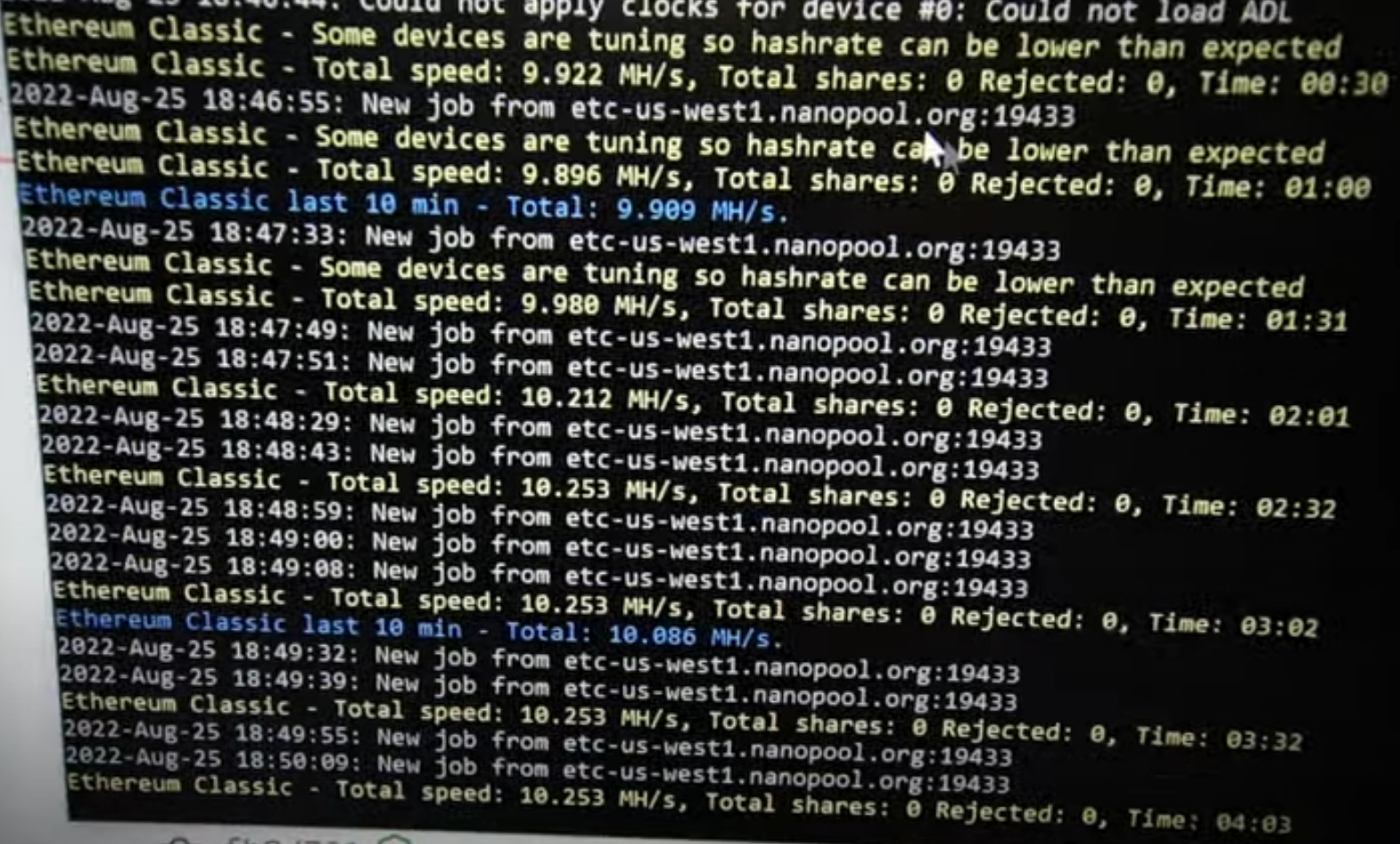

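The latest release of Nanominer, version 3.7.0, adds support for Intel's new discrete graphics cards. Only the entry-level Intel ARC A380 is on sale so far, so Ethereum mining results are known only for that card. The higher-performance Intel ARC A580, A750 and A780 are due later. In the meantime, the entry-level model gives a first look at Intel's GPU architecture, and its results can be extrapolated to the more powerful cards.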

The Nanominer developers themselves state that Intel ARC graphics cards reach 10.2 MH/s in Ethereum mining without overclocking.

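Independent tests have since appeared online and confirm that the Intel ARC A380 hashes at about 10 MH/s while drawing 70-75 W. In other words, the A380 needs about 7.5 W per MH/s, which is very poor efficiency for a modern graphics card. For comparison, the Nvidia GTX 1660 Super, a popular mining card released three years earlier, in 2019, manages about 3 W per MH/s.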
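The efficiency figures quoted above follow from dividing power draw by hashrate. A minimal sketch of that arithmetic, using only the numbers given in the text; the function name `watts_per_mhs` is a hypothetical helper chosen for illustration, not part of Nanominer or any other tool:

```python
def watts_per_mhs(power_w: float, hashrate_mhs: float) -> float:
    """Watts drawn per 1 MH/s of hashrate (lower is better)."""
    if hashrate_mhs <= 0:
        raise ValueError("hashrate must be positive")
    return power_w / hashrate_mhs


# Figures quoted in the article for the Intel ARC A380.
a380_best = watts_per_mhs(70, 10)    # lower end of the 70-75 W range: 7.0
a380_worst = watts_per_mhs(75, 10)   # upper end of the 70-75 W range: 7.5

# Figure quoted in the article for the Nvidia GTX 1660 Super.
gtx_1660_super = 3.0

# How many times more power the A380 spends per unit of hashrate.
penalty = a380_worst / gtx_1660_super  # 2.5

print(f"A380: {a380_best:.1f} to {a380_worst:.1f} W per MH/s")
print(f"GTX 1660 Super: {gtx_1660_super:.1f} W per MH/s")
print(f"A380 uses {penalty:.1f}x the power per MH/s")
```

On these numbers the A380 spends roughly two and a half times as much power per unit of hashrate as the 2019 card.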

You can compare the mining energy efficiency of all graphics cards at Profit-mine.com (first select a cryptocurrency to compare).

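As is well known, Ethereum mining hashrate depends primarily on memory bandwidth, and here the Intel card is at a clear disadvantage: with a 96-bit memory bus its bandwidth is only 192 GB/s, against 336 GB/s for the GTX 1660 Super. But even allowing for Intel's weaker memory subsystem, the A380 would need a hashrate 75% higher, that is 17.5 MH/s, to match competitors from three years ago.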
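The 17.5 MH/s target can be checked against the bandwidth figures. The sketch below assumes, purely for illustration, that hashrate scales linearly with memory bandwidth; that assumption is ours and is not stated in the article. The bandwidth ratio does not depend on the unit, so only the two quoted numbers matter. The "implied" GTX 1660 Super hashrate is derived from the article's own figures under that assumption and is not a measured value.

```python
# Figures quoted in the article.
A380_BANDWIDTH = 192.0        # memory bandwidth of the A380
GTX1660S_BANDWIDTH = 336.0    # memory bandwidth of the GTX 1660 Super (same unit)
A380_MEASURED_MHS = 10.0      # hashrate measured in independent tests
A380_TARGET_MHS = 17.5        # hashrate the article says the A380 should reach

# Share of the GTX 1660 Super's bandwidth that the A380 has.
bandwidth_ratio = A380_BANDWIDTH / GTX1660S_BANDWIDTH

# Relative gain needed to go from the measured to the target hashrate.
required_gain = A380_TARGET_MHS / A380_MEASURED_MHS - 1.0

# ASSUMPTION: hashrate is proportional to memory bandwidth.
# Under it, the target corresponds to this GTX 1660 Super hashrate.
implied_gtx1660s_mhs = A380_TARGET_MHS / bandwidth_ratio

# Share of the bandwidth-scaled target the A380 actually reaches.
achieved_share = A380_MEASURED_MHS / A380_TARGET_MHS

print(f"bandwidth ratio: {bandwidth_ratio:.3f}")
print(f"required gain: {required_gain:.0%}")
print(f"implied GTX 1660 Super hashrate: {implied_gtx1660s_mhs:.3f} MH/s")
print(f"A380 reaches {achieved_share:.0%} of its bandwidth-scaled target")
```

In other words, the A380 has about 57% of the older card's memory bandwidth but delivers only about 57% of what that bandwidth alone would predict.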

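In conclusion, the Intel ARC A380's debut as a mining card is a failure. The possible causes range from immature drivers and unoptimized code in Nanominer to design miscalculations in the GPU itself by Intel's engineers.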
