Nanominer 3.7.0, the latest version, adds support for Intel's new discrete graphics cards. So far only the entry-level Intel ARC A380 has gone on sale, so Ethereum mining results are available only for this card. The higher-performance Intel ARC A580, A750 and A780 will arrive later. In the meantime, the entry-level model gives a first look at how the Intel GPU architecture performs, and its results can be extrapolated to the more powerful cards.

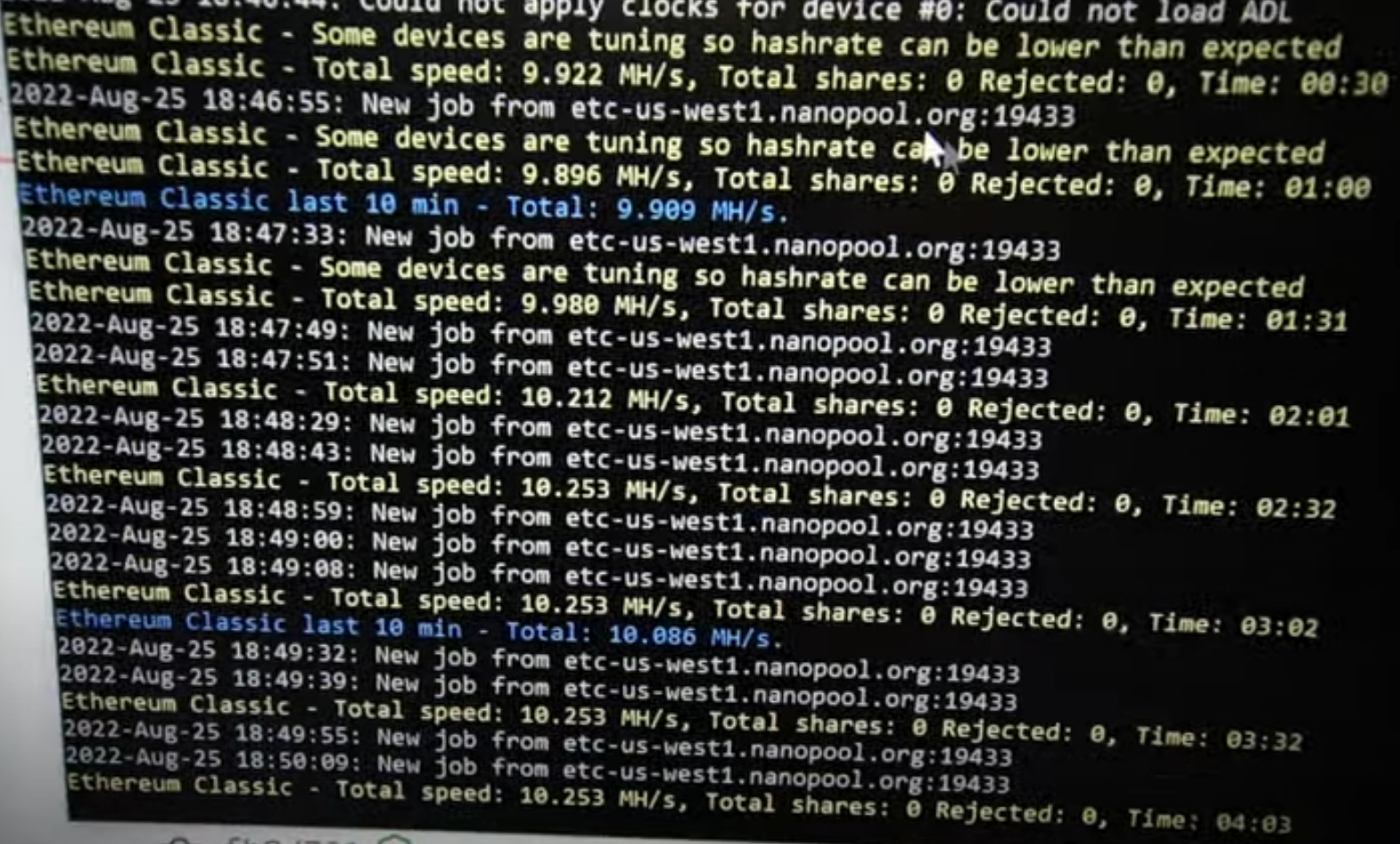

The Nanominer developers themselves report that the Intel ARC A380 reaches 10.2 MH/s in Ethereum mining without overclocking.

Independent tests published online confirm a hashrate of about 10 MH/s for the Intel ARC A380 at a power draw of 70-75 W. In other words, the A380 needs 7.5 W per MH/s (W/MH), which is very poor energy efficiency for a modern video card. For comparison, the Nvidia GTX 1660 Super, a popular mining card released three years ago in 2019, achieves 3 W/MH in this discipline.
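The efficiency figure above is simply power divided by hashrate. Here is a minimal Python sketch of that arithmetic, using only the values quoted in this article. The function name `efficiency_w_per_mh` is hypothetical and used here only for illustration.

```python
def efficiency_w_per_mh(power_w: float, hashrate_mh: float) -> float:
    """Energy efficiency in watts per MH/s (lower is better)."""
    if hashrate_mh <= 0:
        raise ValueError("hashrate must be positive")
    return power_w / hashrate_mh

# Intel ARC A380 figures from independent tests: ~10 MH/s at 70-75 W
a380_low = efficiency_w_per_mh(70, 10)   # 7.0 W/MH
a380_high = efficiency_w_per_mh(75, 10)  # 7.5 W/MH
print(f"A380: {a380_low:.1f}-{a380_high:.1f} W/MH")

# Compare with the 3 W/MH quoted for the GTX 1660 Super
ratio = a380_high / 3
print(f"A380 uses {ratio:.1f}x the power per MH/s")
```

At the upper end of the measured power range, the A380 uses 2.5 times as much energy per unit of hashrate as the GTX 1660 Super.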

You can compare the mining energy efficiency of all video cards here (first choose the cryptocurrency you want to compare).

As is well known, Ethereum hashrate depends primarily on memory bandwidth, and here the Intel card has an obvious handicap: with a 96-bit memory bus, its bandwidth is only 192 GB/s, compared with 336 GB/s for the GTX 1660 Super. Even after allowing for the Intel card's weaker memory subsystem, however, the A380 would need a 75% higher hashrate, i.e. 17.5 MH/s, to match the level competitors reached three years ago.
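Peak memory bandwidth is bus width times per-pin data rate, divided by 8 bits per byte. The minimal sketch below reproduces the figures in this article. It assumes a per-pin GDDR6 data rate of 16 Gbit/s for the A380, which is the rate that gives 192 GB/s on a 96-bit bus, and 14 Gbit/s for the 192-bit GTX 1660 Super. The helper name `peak_bandwidth_gb_s` is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8

# Assumed per-pin GDDR6 data rates (see the lead-in above)
a380_bw = peak_bandwidth_gb_s(96, 16.0)       # 192.0 GB/s
gtx1660s_bw = peak_bandwidth_gb_s(192, 14.0)  # 336.0 GB/s
bw_ratio = gtx1660s_bw / a380_bw              # 1.75
print(a380_bw, gtx1660s_bw, bw_ratio)

# The article's target: the A380's ~10 MH/s raised by 75%
target_mh = 10 * 1.75                         # 17.5 MH/s
print(target_mh)
```

The GTX 1660 Super's bandwidth advantage works out to exactly 1.75, which is the same factor as the 75% figure above.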

In conclusion, the Intel ARC A380's debut as a mining card has been unsuccessful. The causes could range from immature drivers and unoptimized code in Nanominer to design miscalculations by Intel's engineers in the GPU itself.